Ensemble Learning Techniques for Enhanced Predictive Accuracy

Welcome to the world of ensemble learning, where diversity among models isn’t just celebrated, it’s put to work for better predictive accuracy. If you’re ready to take your data analysis to the next level, this post walks through how combining multiple models can outperform any single one. We’ll cover the three main techniques (boosting, bagging, and stacking), the benefits they bring, where they have been applied, and the practical challenges to expect. Let’s dive in!

Types of Ensemble Learning Techniques

When it comes to Ensemble Learning Techniques, there are several approaches that can be utilized to harness the power of diverse models working together. One popular method is boosting, where multiple weak learners are combined sequentially to create a strong predictive model.

Another technique is bagging, which trains each model independently on its own bootstrap sample of the training data and then combines the models’ predictions through averaging (for regression) or voting (for classification). This mainly reduces variance, which often improves overall accuracy.

Stacking takes ensemble learning a step further by training a meta-model on the predictions of base models. By blending different algorithms together, Stacking aims to capture diverse patterns in the data for enhanced predictive performance.

Each type of ensemble technique brings its own unique strengths and advantages to the table, allowing data scientists to leverage diversity in models for more robust and accurate predictions.

A. Boosting

Boosting is a powerful ensemble learning technique that focuses on building a strong predictive model by combining multiple weak learners. In Boosting, each model learns from the mistakes of its predecessor, continuously improving the overall predictive accuracy.

One of the most popular algorithms used in Boosting is AdaBoost (Adaptive Boosting), which assigns weights to data points and adjusts them at each iteration to give more importance to misclassified samples. This way, AdaBoost creates a sequence of models that work collaboratively to make accurate predictions.
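The reweighting loop described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a production implementation: it assumes a single numeric feature, labels of +1 and -1, and decision stumps as the weak learners. The function names (`best_stump`, `adaboost`, `adaboost_predict`) and the toy data are made up for this example.

```python
import math

def stump_predict(x, threshold, polarity):
    """Decision stump: `polarity` at or below the threshold, its negation above it."""
    return polarity if x <= threshold else -polarity

def best_stump(xs, ys, weights):
    """Return (weighted_error, threshold, polarity) for the lowest weighted error."""
    best = None
    for threshold in sorted(set(xs)):
        for polarity in (1, -1):
            error = sum(w for x, y, w in zip(xs, ys, weights)
                        if stump_predict(x, threshold, polarity) != y)
            if best is None or error < best[0]:
                best = (error, threshold, polarity)
    return best

def adaboost(xs, ys, rounds=3):
    n = len(xs)
    weights = [1.0 / n] * n                      # start with uniform sample weights
    ensemble = []
    for _ in range(rounds):
        error, threshold, polarity = best_stump(xs, ys, weights)
        error = min(max(error, 1e-10), 1 - 1e-10)  # guard against log(0)
        alpha = 0.5 * math.log((1 - error) / error)  # this stump's vote strength
        ensemble.append((alpha, threshold, polarity))
        # Misclassified samples (y * prediction == -1) get heavier, correct ones lighter.
        weights = [w * math.exp(-alpha * y * stump_predict(x, threshold, polarity))
                   for x, y, w in zip(xs, ys, weights)]
        total = sum(weights)
        weights = [w / total for w in weights]   # renormalize to sum to 1
    return ensemble

def adaboost_predict(ensemble, x):
    """Weighted vote of all stumps."""
    score = sum(alpha * stump_predict(x, t, p) for alpha, t, p in ensemble)
    return 1 if score >= 0 else -1

# Toy data (illustrative): no single stump can separate these labels.
xs = list(range(10))
ys = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]
ensemble = adaboost(xs, ys, rounds=3)
training_errors = sum(adaboost_predict(ensemble, x) != y for x, y in zip(xs, ys))
print(training_errors)  # 0 on this toy set
```

No single stump gets fewer than three of these ten points wrong, yet the weighted vote of three stumps classifies all of them correctly, which is the "weak learners into a strong learner" idea in miniature.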

Another well-known boosting algorithm is gradient boosting, where each new model is fit to the residual errors of the ensemble built so far (more generally, to the negative gradient of the loss function). Through this iterative process, gradient boosting steadily reduces the ensemble’s error on the training data.
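Here is a rough sketch of that residual-fitting loop for regression with squared error. It makes several assumptions that go beyond the text: one numeric feature, regression stumps as the weak learners, and a `learning_rate` that shrinks each stump's contribution (a common choice, but a choice nonetheless). All names and the data are illustrative.

```python
def fit_stump(xs, targets):
    """Best single-split regressor: predicts the mean target on each side."""
    best = None
    for threshold in sorted(set(xs))[:-1]:       # keep both sides non-empty
        left = [t for x, t in zip(xs, targets) if x <= threshold]
        right = [t for x, t in zip(xs, targets) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((t - left_mean) ** 2 for t in left)
               + sum((t - right_mean) ** 2 for t in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    return best[1:]

def gradient_boost(xs, ys, rounds=50, learning_rate=0.1):
    base = sum(ys) / len(ys)                     # start from the mean prediction
    predictions = [base] * len(xs)
    stumps = []
    for _ in range(rounds):
        # For squared error, the residuals are the negative gradient of the loss.
        residuals = [y - p for y, p in zip(ys, predictions)]
        threshold, left_mean, right_mean = fit_stump(xs, residuals)
        stumps.append((threshold, left_mean, right_mean))
        predictions = [p + learning_rate * (left_mean if x <= threshold else right_mean)
                       for x, p in zip(xs, predictions)]
    return base, learning_rate, stumps

def boosted_predict(model, x):
    base, learning_rate, stumps = model
    return base + learning_rate * sum(left if x <= t else right
                                      for t, left, right in stumps)

def training_mse(model, xs, ys):
    return sum((boosted_predict(model, x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Toy data (illustrative).
xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1.0, 1.3, 2.1, 3.8, 4.1, 6.2, 6.0, 7.9]
for rounds in (0, 5, 50):
    print(rounds, training_mse(gradient_boost(xs, ys, rounds=rounds), xs, ys))
```

The training error drops as rounds are added. That says nothing about held-out error, which is why the number of rounds is normally chosen with validation data.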

Boosting techniques have been successfully applied in various fields such as finance for stock market forecasting and healthcare for disease diagnosis. The key strength of boosting lies in its ability to leverage diverse weak learners to create a robust and accurate predictive model.

B. Bagging

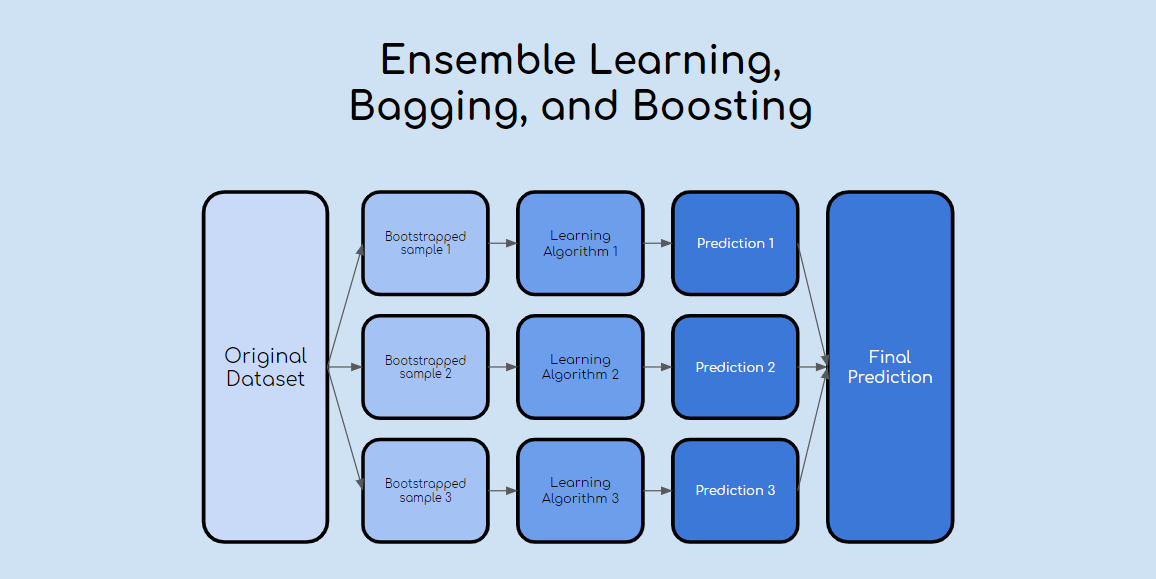

Bagging, short for Bootstrap Aggregating, is a powerful ensemble learning technique that involves creating multiple subsets of the training data through random sampling with replacement. Each subset is used to train an individual model, and the models’ predictions are combined to make a final decision. The beauty of bagging lies in its ability to reduce variance by averaging out the predictions from diverse models.

By allowing each model to learn on slightly different data, bagging helps improve the overall predictive accuracy and generalization of the ensemble. This technique is particularly effective when used with unstable learners that are sensitive to small changes in the training set. Bagging can be applied across various machine learning algorithms such as decision trees, neural networks, and more.
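To make the bootstrap-then-vote recipe concrete, here is a small sketch in plain Python. It assumes a binary classification task with one numeric feature and uses a decision stump as the base learner. The helper names (`fit_stump`, `bagging_fit`, `bagged_predict`), the seed, and the toy data are all invented for illustration.

```python
import random
from collections import Counter

def majority(labels, default=0):
    """Most common label, or `default` for an empty list."""
    return Counter(labels).most_common(1)[0][0] if labels else default

def fit_stump(sample):
    """Pick the threshold (with majority labels on each side) that makes the fewest errors."""
    best = None
    for threshold in sorted({x for x, _ in sample}):
        left = [y for x, y in sample if x <= threshold]
        right = [y for x, y in sample if x > threshold]
        left_label = majority(left)
        right_label = majority(right, default=left_label)
        errors = (sum(y != left_label for y in left)
                  + sum(y != right_label for y in right))
        if best is None or errors < best[0]:
            best = (errors, threshold, left_label, right_label)
    return best[1:]

def stump_predict(stump, x):
    threshold, left_label, right_label = stump
    return left_label if x <= threshold else right_label

def bagging_fit(data, n_models=25, seed=0):
    rng = random.Random(seed)
    models = []
    for _ in range(n_models):
        # Bootstrap sample: same size as the data, drawn with replacement.
        sample = rng.choices(data, k=len(data))
        models.append(fit_stump(sample))
    return models

def bagged_predict(models, x):
    """Majority vote across all models."""
    return majority([stump_predict(m, x) for m in models])

# Toy data (illustrative): label is 1 when x >= 10, with two noisy labels mixed in.
data = [(x, int(x >= 10)) for x in range(20)]
data[3] = (3, 1)
data[15] = (15, 0)
models = bagging_fit(data)
print(sorted({threshold for threshold, _, _ in models}))  # thresholds vary by sample
print(bagged_predict(models, 12))
```

Because every stump sees a slightly different resample, the individual thresholds differ from model to model. The vote smooths over those differences, which is where the variance reduction comes from.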

Bagging offers a robust approach towards building ensembles that can enhance predictive performance by leveraging diversity among individual models within the ensemble.

C. Stacking

Ensemble learning offers a diverse range of techniques to enhance predictive accuracy, and one key method is stacking. Stacking involves combining multiple models to improve performance by blending their predictions. Here’s how it works: instead of using just one base model, stacking leverages the strengths of different algorithms to create a more robust and accurate final prediction.

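First, several base models are trained on the dataset independently. Then, a meta-model is introduced to learn how best to combine the predictions from these base models. To keep the meta-model from simply rewarding base models that memorized the training data, it is usually trained on predictions the base models made for examples they did not see during training, for instance via cross-validation. By allowing each model to focus on its specific area of expertise and then aggregating their outputs intelligently, stacking can often outperform individual models alone.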
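The two-stage procedure above might look like the following sketch. It is built on assumptions not stated in the text: a regression task with one feature, two deliberately different base learners (a least-squares line and a nearest-neighbour lookup), a linear meta-model without intercept, and base-model predictions generated out-of-fold so the meta-model only sees predictions for examples the base models were not trained on. All names are hypothetical.

```python
def fit_linear(train):
    """Least-squares line through the training points."""
    n = len(train)
    mean_x = sum(x for x, _ in train) / n
    mean_y = sum(y for _, y in train) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in train)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in train)
    slope = sxy / sxx if sxx else 0.0
    return lambda x: mean_y + slope * (x - mean_x)

def fit_nearest(train):
    """1-nearest-neighbour: return the target of the closest training point."""
    return lambda x: min(train, key=lambda p: abs(p[0] - x))[1]

BASE_LEARNERS = [fit_linear, fit_nearest]

def out_of_fold_predictions(data, k=3):
    """For each example, base-model predictions from models that never saw it."""
    oof = [None] * len(data)
    for fold in range(k):
        train = [p for i, p in enumerate(data) if i % k != fold]
        models = [fit(train) for fit in BASE_LEARNERS]
        for i, (x, _) in enumerate(data):
            if i % k == fold:
                oof[i] = [m(x) for m in models]
    return oof

def fit_meta(features, targets):
    """Least-squares weights for two features via the 2x2 normal equations."""
    s11 = sum(f[0] * f[0] for f in features)
    s12 = sum(f[0] * f[1] for f in features)
    s22 = sum(f[1] * f[1] for f in features)
    t1 = sum(f[0] * y for f, y in zip(features, targets))
    t2 = sum(f[1] * y for f, y in zip(features, targets))
    det = s11 * s22 - s12 * s12
    if abs(det) < 1e-12:                 # base models agree everywhere: plain average
        return (0.5, 0.5)
    return ((t1 * s22 - s12 * t2) / det, (s11 * t2 - s12 * t1) / det)

def fit_stack(data, k=3):
    weights = fit_meta(out_of_fold_predictions(data, k), [y for _, y in data])
    base_models = [fit(data) for fit in BASE_LEARNERS]   # refit on all the data
    return weights, base_models

def stack_predict(model, x):
    weights, base_models = model
    return sum(w * m(x) for w, m in zip(weights, base_models))

# Toy data (illustrative): a curved relationship neither base model captures alone.
data = [(float(x), 0.5 * x * x) for x in range(12)]
model = fit_stack(data)
print(model[0])                  # learned weight for each base model
print(stack_predict(model, 5.5))
```

The learned weights show how much the meta-model trusts each base learner. On data that one base learner already fits perfectly, the meta-model should put essentially all its weight there.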

The beauty of stacking lies in its ability to capture diverse perspectives and insights from various algorithms, resulting in a more comprehensive understanding of the data at hand. This collaborative approach can improve predictive accuracy and, when the meta-model is trained carefully, robustness against overfitting, making it a valuable tool in any data scientist’s arsenal when striving for optimal results.

Benefits of Using Ensemble Learning Techniques

Ensemble learning techniques offer a myriad of benefits that can significantly enhance predictive accuracy in machine learning models. By combining multiple base learners, ensemble methods can capture the diverse perspectives and expertise of each individual model. This diversity helps to reduce errors and improve overall performance.

One key benefit of using ensemble learning is its ability to boost predictive accuracy. Through aggregating the predictions of various models, ensemble techniques can provide more accurate outcomes than any single model alone. This increased precision is especially valuable in complex datasets where traditional algorithms may struggle to generalize effectively.

Additionally, ensemble learning methods are often more robust against overfitting. Averaging the predictions of many models cancels out much of the variance of the individual models, so ensembles are less prone to making erroneous predictions based on noise or outliers within the data. This helps ensure more reliable and stable results across different scenarios.

Incorporating ensemble learning techniques into your machine learning workflow can ultimately lead to more robust and accurate predictive models with improved generalization capabilities.

A. Improved Predictive Accuracy

Ensemble Learning Techniques offer a powerful way to enhance predictive accuracy in machine learning models. By combining the predictions of multiple individual models, ensemble methods are able to capture more complex patterns and relationships within the data. This leads to more accurate predictions and better overall performance.

One key benefit of using Ensemble Learning is its ability to reduce bias and variance in predictive models: bagging mainly reduces variance, while boosting mainly reduces bias. By aggregating diverse sets of predictions, ensemble methods can effectively mitigate errors that may arise from individual models’ shortcomings. This results in more reliable and robust predictions that can generalize well to unseen data.

Moreover, Ensemble Learning leverages the principle of diversity among models – each model brings unique perspectives and strengths to the table. This diversity helps minimize errors caused by noise or outliers in the data, leading to improved predictive accuracy across different scenarios.
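A standard way to see why diversity matters is to look at the variance of an averaged prediction. As a simplifying assumption, suppose the ensemble averages $M$ models $f_1, \dots, f_M$ whose predictions at a point $x$ each have variance $\sigma^2$ and pairwise correlation $\rho$ (these symbols are introduced here purely for illustration). Then:

$$
\operatorname{Var}\!\left(\frac{1}{M}\sum_{i=1}^{M} f_i(x)\right)
= \frac{1}{M^2}\left(M\sigma^2 + M(M-1)\,\rho\,\sigma^2\right)
= \rho\,\sigma^2 + \frac{1-\rho}{M}\,\sigma^2
$$

The second term shrinks as more models are added, but the first does not. Adding models helps most when they are weakly correlated, and helps very little when they all make the same mistakes.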

In essence, by harnessing the collective intelligence of multiple models through Ensemble Learning Techniques, organizations can achieve higher levels of accuracy in their predictive analytics tasks.

B. Robustness Against Overfitting

Overfitting is a common challenge in machine learning where a model performs well on training data but fails to generalize to new, unseen data. Ensemble learning techniques, such as bagging and boosting, help address this issue by combining multiple models to make more accurate predictions.

By aggregating the predictions of several individual models, ensemble methods reduce the risk of overfitting. Bagging, for example, builds multiple independent models in parallel and averages their predictions. This diversity in approaches helps create a more stable and reliable prediction model.

Boosting focuses on sequentially improving the performance of weak learners by assigning higher weights to misclassified samples. This iterative process produces a strong learner that often generalizes well to new data. Boosting can still overfit noisy data if it runs for too many rounds, so the number of rounds is usually tuned on held-out data.

Leveraging ensemble learning techniques enhances the robustness of predictive models against overfitting by harnessing the power of diverse algorithms working together harmoniously.

Examples of Successful Applications of Ensemble Learning

Ensemble learning techniques have been leveraged across various industries to achieve remarkable results. In the field of finance, ensemble methods have been used to predict stock market trends with higher accuracy by combining multiple models. This has enabled traders and investors to make more informed decisions based on reliable forecasts.

In healthcare, ensemble learning has proven to be effective in medical diagnosis and disease prediction. By aggregating diverse algorithms, researchers are able to enhance the accuracy of diagnostic systems, leading to early detection and improved patient outcomes.

Moreover, in e-commerce, companies utilize ensemble techniques for recommendation systems that personalize user experiences. By blending different models, businesses can offer tailored product suggestions that cater to individual preferences and behaviors.

These successful applications demonstrate the power of ensemble learning in driving innovation and efficiency across various domains.

Implementing Ensemble Learning in Practice

Implementing Ensemble Learning in practice involves combining multiple machine learning models to improve predictive accuracy. To start, select diverse base learners such as decision trees, support vector machines, and neural networks. Each model should bring a unique perspective to the ensemble.

Next, decide on the type of ensemble method to use – whether boosting for iterative improvement, bagging for parallel training with bootstrapping, or Stacking for blending predictions from different models. Experiment with different combinations and parameters to find the optimal ensemble setup for your specific dataset.
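One way to run that kind of experiment is to score each candidate setup with k-fold cross-validation and compare the held-out errors. The sketch below is a minimal, assumption-laden illustration: a one-feature regression task, two toy learners, and an averaging ensemble of the two. The helper names (`k_fold_splits`, `cross_val_mse`, `fit_average_of`) and the data are invented for this example.

```python
def k_fold_splits(n, k):
    """Yield (train_indices, test_indices); fold f holds indices i with i % k == f."""
    for fold in range(k):
        test = [i for i in range(n) if i % k == fold]
        train = [i for i in range(n) if i % k != fold]
        yield train, test

def cross_val_mse(fit, data, k=5):
    """Mean squared error on held-out folds, averaged over k folds."""
    fold_errors = []
    for train_idx, test_idx in k_fold_splits(len(data), k):
        model = fit([data[i] for i in train_idx])
        errors = [(model(data[i][0]) - data[i][1]) ** 2 for i in test_idx]
        fold_errors.append(sum(errors) / len(errors))
    return sum(fold_errors) / len(fold_errors)

def fit_mean(train):
    """Baseline learner: always predict the training mean."""
    mean = sum(y for _, y in train) / len(train)
    return lambda x: mean

def fit_linear(train):
    """Least-squares line through the training points."""
    n = len(train)
    mean_x = sum(x for x, _ in train) / n
    mean_y = sum(y for _, y in train) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in train)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in train)
    slope = sxy / sxx if sxx else 0.0
    return lambda x: mean_y + slope * (x - mean_x)

def fit_average_of(*fits):
    """Build a fit function for an ensemble that averages the given learners."""
    def fit(train):
        models = [f(train) for f in fits]
        return lambda x: sum(m(x) for m in models) / len(models)
    return fit

# Toy data (illustrative): a trend plus a repeating pattern.
data = [(float(x), 0.5 * x + (x % 3)) for x in range(15)]
candidates = {
    "mean only": fit_mean,
    "linear only": fit_linear,
    "average of both": fit_average_of(fit_mean, fit_linear),
}
for name, fit in candidates.items():
    print(name, round(cross_val_mse(fit, data), 3))
```

The same loop works for any candidate that follows the "fit on training pairs, return a predictor" convention used here, so swapping in other ensemble setups only means adding entries to `candidates`.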

Remember that implementing Ensemble Learning requires more computational resources compared to individual models. It’s crucial to monitor performance metrics closely and fine-tune the ensemble regularly based on real-time feedback.

Implementing Ensemble Learning can be challenging but rewarding when done correctly. It’s about leveraging diversity within your models to create a harmonious predictive powerhouse.

Potential Challenges and How to Overcome Them

As with any advanced technique, implementing ensemble learning can present some challenges along the way. One common hurdle is the complexity of managing multiple models simultaneously. Keeping track of various algorithms and their interactions can be overwhelming for beginners.

Moreover, ensuring that the diverse set of models in an ensemble is complementary rather than redundant requires a deep understanding of each algorithm’s strengths and weaknesses. It may take time to fine-tune the ensemble to achieve optimal performance.

Another challenge is the increased computational resources needed when working with ensembles compared to individual models. Training multiple models simultaneously can strain hardware capabilities and prolong training times.

To overcome these challenges, it’s essential to start small and gradually scale up your ensemble as you gain more experience. Focus on mastering one type of ensemble technique before delving into more complex combinations. Additionally, leveraging tools and libraries specifically designed for ensemble learning can streamline the process and make management easier.

Conclusion: Embracing Diversity for Better Predictions

In a world where data-driven decisions are becoming increasingly crucial, ensemble learning techniques offer a powerful solution to enhance predictive accuracy. By combining multiple models through methods such as boosting, bagging, and stacking, organizations can harness the strength of diversity in their data analysis processes.

The benefits of using ensemble learning techniques are clear: improved predictive accuracy and robustness against overfitting. Successful applications across various industries showcase the effectiveness of these methods in generating more reliable insights from complex datasets.

Implementing ensemble learning in practice may come with challenges like model selection and computational resources. However, by understanding these hurdles and leveraging tools like cross-validation and hyperparameter tuning, organizations can overcome obstacles to achieve optimal results.

Mastering ensemble learning techniques is not just about blending models; it’s about embracing diversity in data analysis. By incorporating varied perspectives and approaches through ensemble methods, businesses can unlock new levels of accuracy and reliability in their predictive modeling efforts. So why settle for one when you can have many? Embrace diversity for better predictions today!