Understanding Decision Trees and Random Forests

Welcome to the world of Decision Trees and Random Forests! These algorithms work a bit like forest rangers navigating through data: at each branching point they ask a question and follow the matching path. In this article we look at how they make those choices, why combining many trees into a forest helps, and how both methods shape predictive models.

What is a Decision Tree?

Imagine a decision tree as a roadmap for making choices. It’s like a flowchart that helps you navigate through different options to reach a specific outcome.

At its core, a decision tree consists of nodes and branches. Internal nodes test a condition, such as the value of an input feature (in decision analysis they can also represent decisions or chance events), while branches represent the possible outcomes of each test.

Each node in the tree leads to subsequent nodes until reaching the final outcome, resembling the branching structure of an actual tree.

Decision trees are used in various fields such as data mining, machine learning, and business analytics to visualize decision-making processes and make predictions based on input variables.

Understanding how decision trees work also helps people reason through complex scenarios, because it breaks a large decision down into a sequence of manageable steps.

How Does a Decision Tree Work?

A decision tree starts with a root node, which represents the first question or condition to be assessed. From there, it branches into further nodes based on specific criteria until it reaches leaf nodes, which hold the final decisions or outcomes.
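To make the root-to-leaf walk concrete, here is a minimal Python sketch. The `Node` class, its field names, and the toy loan-style tree (with made-up thresholds) are hypothetical illustrations rather than any library's API: internal nodes store a feature index and a threshold, and leaves store a prediction.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Node:
    """One node of a binary decision tree (hypothetical structure)."""
    feature: Optional[int] = None      # index of the feature tested here (internal nodes)
    threshold: Optional[float] = None  # go left if x[feature] <= threshold
    left: Optional["Node"] = None
    right: Optional["Node"] = None
    value: Optional[str] = None        # prediction stored at a leaf

    def is_leaf(self) -> bool:
        return self.value is not None


def predict(node: Node, x: Sequence[float]) -> str:
    """Walk from the root to a leaf, answering one question per node."""
    while not node.is_leaf():
        node = node.left if x[node.feature] <= node.threshold else node.right
    return node.value


# Toy tree with made-up thresholds: feature 0 = income (thousands), feature 1 = debt ratio.
tree = Node(
    feature=0, threshold=40.0,
    left=Node(value="decline"),
    right=Node(
        feature=1, threshold=0.35,
        left=Node(value="approve"),
        right=Node(value="review"),
    ),
)

print(predict(tree, [30.0, 0.10]))  # decline
print(predict(tree, [55.0, 0.20]))  # approve
print(predict(tree, [55.0, 0.50]))  # review
```

Each prediction costs one comparison per level, so even a large tree answers quickly once it has been built.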

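At each node, the algorithm evaluates the features in the dataset to find the split that best separates the observations into more homogeneous groups. It then repeats this process recursively on each resulting group until a stopping criterion is met, for example when a node is pure (all its observations share the same label), a maximum depth is reached, or too few observations remain to split further.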
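The split search and the recursion can be sketched in a few dozen lines of Python. This is a minimal sketch under stated assumptions: it uses Gini impurity as the split criterion (the default criterion in scikit-learn's `DecisionTreeClassifier`; entropy is a common alternative), handles only numeric features with `<=` thresholds placed at midpoints between sorted values, and the names `gini`, `best_split`, and `build` are hypothetical.

```python
from collections import Counter
from typing import Optional, Sequence, Tuple


def gini(labels: Sequence[str]) -> float:
    """Gini impurity, 1 - sum(p_k^2); 0.0 means the node is pure."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(X, y) -> Optional[Tuple[int, float, float]]:
    """Try every midpoint threshold on every feature; return
    (feature, threshold, weighted child impurity) of the best one, or None."""
    best = None
    for f in range(len(X[0])):
        values = sorted(set(row[f] for row in X))
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2
            left = [label for row, label in zip(X, y) if row[f] <= t]
            right = [label for row, label in zip(X, y) if row[f] > t]
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if best is None or score < best[2]:
                best = (f, t, score)
    return best


def build(X, y, depth: int = 0, max_depth: int = 3) -> dict:
    """Grow the tree recursively. Stop when the node is pure, max_depth is
    reached, or no split is possible; a leaf stores the majority label."""
    majority = Counter(y).most_common(1)[0][0]
    if gini(y) == 0.0 or depth >= max_depth:
        return {"leaf": majority}
    split = best_split(X, y)
    if split is None:
        return {"leaf": majority}
    f, t, _ = split
    left = [i for i, row in enumerate(X) if row[f] <= t]
    right = [i for i, row in enumerate(X) if row[f] > t]
    return {
        "feature": f,
        "threshold": t,
        "left": build([X[i] for i in left], [y[i] for i in left], depth + 1, max_depth),
        "right": build([X[i] for i in right], [y[i] for i in right], depth + 1, max_depth),
    }


# Toy data: feature 0 separates the classes cleanly, feature 1 does not.
X = [[1.0, 5.0], [2.0, 1.0], [3.0, 4.0], [7.0, 2.0], [8.0, 5.0], [9.0, 1.0]]
y = ["no", "no", "no", "yes", "yes", "yes"]
print(best_split(X, y))  # (0, 5.0, 0.0)
print(build(X, y))
```

Here the split on feature 0 at 5.0 leaves two pure children, so the recursion stops after one level. On messier data, `max_depth` is what keeps the tree from growing until it memorizes every row.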

The goal is to produce simple yet effective prediction rules by choosing, at each step, the split that most reduces impurity, measured for example by information gain (based on entropy), Gini impurity, or, for regression, variance. Because each split is just a threshold or category test, decision trees can work with both categorical and continuous features.
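Information gain is the entropy of a parent node minus the size-weighted entropy of its children. A minimal sketch, assuming base-2 logarithms so entropy is measured in bits; `entropy` and `information_gain` are hypothetical helper names.

```python
import math
from collections import Counter
from typing import Sequence


def entropy(labels: Sequence[str]) -> float:
    """Shannon entropy in bits: -sum(p_k * log2(p_k))."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(parent: Sequence[str], children: Sequence[Sequence[str]]) -> float:
    """Parent entropy minus the size-weighted entropy of the child nodes."""
    n = len(parent)
    weighted = sum(len(child) / n * entropy(child) for child in children)
    return entropy(parent) - weighted


parent = ["yes"] * 4 + ["no"] * 4
perfect = [["yes"] * 4, ["no"] * 4]          # split separates the classes completely
useless = [["yes", "yes", "no", "no"]] * 2   # each child keeps the 50/50 mix

print(information_gain(parent, perfect))  # 1.0
print(information_gain(parent, useless))  # 0.0
```

A perfectly separating split removes the full bit of uncertainty in a 50/50 node, while a split that leaves both children just as mixed gains nothing, so the tree would prefer the first.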

Understanding how decision trees work provides insight into their interpretability and flexibility in various machine learning tasks.

Advantages and Disadvantages of Decision Trees

Decision trees are known for their simplicity and interpretability. One of the main advantages is that they mirror human decision-making processes, making them easy to understand even for non-technical users. They also need relatively little data preprocessing: numerical features do not have to be scaled or normalized, and many implementations can split on categorical features directly, saving time and effort in the model development phase.

On the flip side, decision trees are prone to overfitting, especially when they are grown deep on noisy or complex datasets: they can memorize the training data and then generalize poorly to unseen data, which hurts their predictive accuracy. Decision trees are also unstable, since a small change in the training data can produce a very different tree structure.

Despite these drawbacks, decision trees remain a popular choice in machine learning due to their transparency and ease of interpretation.

What is a Random Forest?

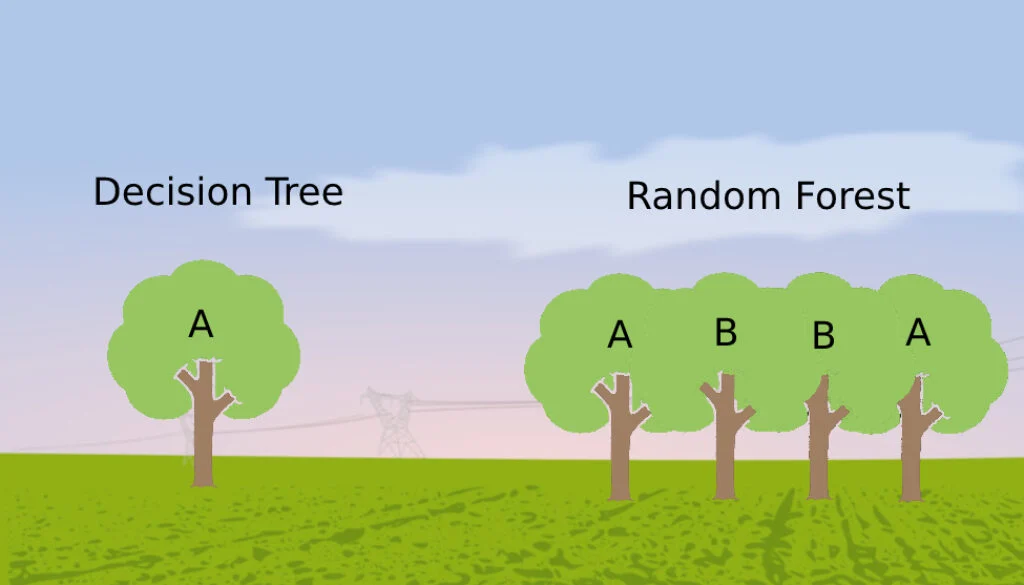

Random Forest is a powerful machine learning algorithm that generates multiple decision trees and combines their outputs to make more accurate predictions.

Rather than relying on a single decision tree, a Random Forest takes an ensemble approach: it builds many trees and combines their results, averaging them for regression and taking a majority vote for classification, to improve accuracy.

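Each tree in the forest is trained on a bootstrap sample of the rows (drawn at random with replacement), and each split considers only a random subset of the features. This randomness makes the trees diverse, which reduces overfitting when their predictions are combined.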
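In the standard random forest recipe, each tree gets a bootstrap sample of the rows, and each split looks at only a random subset of the features. The sketch below shows both sampling steps in isolation. The helper names are hypothetical, and drawing about sqrt(n_features) features per split is an assumed heuristic (a common default for classification), not a fixed rule.

```python
import math
import random
from typing import List


def bootstrap_sample(n_rows: int, rng: random.Random) -> List[int]:
    """Draw n_rows row indices with replacement; some rows repeat, others are left out."""
    return [rng.randrange(n_rows) for _ in range(n_rows)]


def feature_subset(n_features: int, rng: random.Random) -> List[int]:
    """Pick about sqrt(n_features) distinct feature indices (an assumed, common default)."""
    k = max(1, int(math.sqrt(n_features)))
    return sorted(rng.sample(range(n_features), k))


rng = random.Random(42)  # fixed seed so the sketch is reproducible
n_rows, n_features = 10, 9
for tree_id in range(3):
    rows = bootstrap_sample(n_rows, rng)
    # A full implementation draws a fresh subset at *every* split of the tree;
    # here we draw just the one used at the root split.
    root_features = feature_subset(n_features, rng)
    print(f"tree {tree_id}: rows={sorted(rows)} root split considers features {root_features}")
```

Which rows are duplicated or left out, and which features are on offer, changes from tree to tree. That variety is what makes the trees disagree in useful ways.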

By aggregating the predictions from multiple individual trees, Random Forest can handle complex relationships within data sets more effectively.

This algorithm handles large, high-dimensional datasets well and is relatively robust to noise and outliers in the data.

Random Forests are versatile tools for predictive modeling across various industries due to their ability to provide reliable results.

How Does a Random Forest Work?

Random Forest is a powerful ensemble learning technique that combines the predictions of multiple decision trees to improve accuracy and reduce overfitting. A single deep decision tree has high variance, meaning it is very sensitive to the particular training data it sees. Random Forest counters this by making the trees diverse: each one is trained on a bootstrap sample of the data, and each split considers only a random subset of features.

Each tree in the forest makes its prediction independently, and the final output is obtained by averaging the individual predictions (regression) or by majority vote (classification). Because the trees' individual errors partly cancel out, this improves the overall predictive performance of the model.
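Combining the trees' outputs is the simplest part of the algorithm. A minimal sketch with hypothetical helper names, where the five per-tree outputs are made-up values for a single input row: a majority vote for classification and a plain mean for regression.

```python
from collections import Counter
from statistics import mean
from typing import Sequence


def majority_vote(predictions: Sequence[str]) -> str:
    """Classification: the class predicted by the most trees wins.
    Ties go to the label seen first (Counter.most_common ordering)."""
    return Counter(predictions).most_common(1)[0][0]


def average(predictions: Sequence[float]) -> float:
    """Regression: the forest's output is the mean of the trees' outputs."""
    return mean(predictions)


# Made-up outputs from five trees for one input row.
print(majority_vote(["spam", "ham", "spam", "spam", "ham"]))  # spam
print(average([210.0, 190.0, 205.0, 195.0, 200.0]))           # 200.0
```

Some libraries, scikit-learn among them, combine classifiers by averaging the trees' predicted class probabilities instead of counting hard votes. The idea is the same, but the probability average uses more of each tree's information.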

One key advantage of Random Forest is that it handles large, high-dimensional datasets effectively. Because the trees are independent of one another, they can be trained in parallel, which helps the method scale to large problems.

Additionally, Random Forest provides feature importance scores that help identify the most useful inputs, and averaging many trees makes it relatively robust to noisy input variables. Support for missing values, however, varies by implementation. These qualities make Random Forest a popular choice for many machine learning tasks where performance matters, although a forest of hundreds of trees is harder to interpret than a single tree.

Benefits of Using Random Forests for Predictive Modeling

Random Forests offer several benefits when it comes to predictive modeling. One major advantage is their ability to handle large datasets with high dimensionality. Because they combine many decorrelated trees, they are much less prone to overfitting than a single deep tree, which usually leads to more accurate predictions.

Moreover, Random Forests are relatively robust to outliers and noise in the data. Because they aggregate many decision trees, an error or anomaly that misleads one tree has limited impact on the overall model performance.

Another benefit is that Random Forests provide feature importance rankings, allowing users to understand which variables have the most significant influence on the prediction outcome. This insight can be invaluable for making informed decisions or optimizing future models.
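Libraries compute these rankings in different ways. scikit-learn's forests, for example, expose impurity-based scores through `feature_importances_`. The sketch below shows a different, model-agnostic technique, permutation importance: shuffle one feature column and measure how much accuracy drops. It is a hand-rolled illustration, not scikit-learn's `sklearn.inspection.permutation_importance`, and `model` is a hypothetical stand-in for a trained forest that only looks at feature 0.

```python
import random
from typing import Callable, List, Sequence

Row = List[float]


def accuracy(model: Callable[[Row], int], X: Sequence[Row], y: Sequence[int]) -> float:
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(
    model: Callable[[Row], int], X: Sequence[Row], y: Sequence[int],
    n_repeats: int = 5, seed: int = 0,
) -> List[float]:
    """Mean drop in accuracy when each feature column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for f in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[f] for row in X]
            rng.shuffle(column)
            # Copy each row, replacing only feature f with the shuffled value.
            X_perm = [row[:f] + [v] + row[f + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical "trained model" that only looks at feature 0.
def model(row: Row) -> int:
    return int(row[0] > 0.5)


X = [[0.1, 0.9], [0.2, 0.1], [0.8, 0.5], [0.9, 0.3], [0.3, 0.7], [0.7, 0.2]]
y = [model(row) for row in X]  # labels the model gets 100% right
print(permutation_importance(model, X, y))
```

Shuffling feature 1 never changes a prediction, so its importance is exactly zero. Shuffling feature 0 breaks the link between input and label and can only lower accuracy, which is how the ranking surfaces the influential variable.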

Additionally, Random Forests are versatile and can be applied to various types of problems like classification, regression, and ranking tasks. Their flexibility makes them a popular choice for many machine learning applications across different industries.

Real-World Applications of Decision Trees and Random Forests

Decision trees and random forests are not just theoretical concepts but have tangible applications in various real-world scenarios. In finance, these algorithms are used to assess credit risk by banks and financial institutions. By analyzing variables such as income, credit history, and loan amount, decision trees can predict the likelihood of a borrower defaulting.

In healthcare, decision trees can support the diagnosis of diseases based on symptoms and medical test results, and doctors can use these models to inform decisions about patient care pathways. Random forests are also employed in bioinformatics for gene expression analysis to identify patterns in genetic data that may indicate disease susceptibility or treatment response.

Moreover, e-commerce companies use random forests for personalized product recommendations based on user behavior. By analyzing past purchases and browsing history, businesses can tailor their marketing strategies to individual preferences effectively.

The versatility of decision trees and random forests extends beyond these industries into fields like marketing, manufacturing, and environmental science where predictive modeling is essential for making strategic decisions.

Challenges in Implementing Decision Trees and Random Forests

Implementing decision trees and random forests comes with its own set of challenges that data scientists and analysts need to navigate. One common challenge is overfitting, where the model performs exceptionally well on training data but poorly on unseen data because it is too complex. To combat this, hyperparameter tuning (for example, limiting tree depth or the minimum number of samples per leaf) and cross-validation are crucial.
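Cross-validation estimates how a setting such as maximum tree depth will perform on unseen data by rotating which slice of the data is held out. A minimal sketch of k-fold index splitting; `k_fold_indices` is a hypothetical helper, and the commented tuning loop assumes `train` and `score` functions that you would supply yourself.

```python
import random
from typing import Iterator, List, Tuple


def k_fold_indices(n: int, k: int, seed: int = 0) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train_idx, val_idx) pairs; every row is used for validation exactly once."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)        # shuffle once so folds are not ordered by row
    folds = [idx[i::k] for i in range(k)]   # k roughly equal folds
    for i in range(k):
        val = sorted(folds[i])
        train = sorted(j for m, fold in enumerate(folds) if m != i for j in fold)
        yield train, val


# Tuning sketch (train and score are hypothetical, user-supplied functions):
# for depth in (2, 4, 8, None):
#     scores = [score(train(X, y, tr, max_depth=depth), X, y, va)
#               for tr, va in k_fold_indices(len(y), k=5)]
#     print(depth, sum(scores) / len(scores))

for train, val in k_fold_indices(n=10, k=5):
    print("train:", train, "validate:", val)
```

The depth with the best average validation score, rather than the best training score, is the one to keep, because training accuracy keeps rising as a tree overfits.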

Another hurdle is interpretability, especially for deep decision trees with numerous branches and for random forests that combine hundreds of trees. Explaining the rationale behind a specific prediction can be difficult, which limits trust from stakeholders who value transparency in AI-driven decisions.

Moreover, handling missing data effectively is essential, because support for missing values during training varies by algorithm and library, and many implementations reject them outright. Imputing or removing missing values strategically, without introducing bias, is key to accurate predictions.
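One simple strategy is to replace each missing value with that column's median, computed on the training rows only so that validation or test data does not leak into the model. A minimal sketch, assuming missing entries are represented as `None`; `fit_medians` and `impute` are hypothetical names, and median imputation is just one of several reasonable choices.

```python
from statistics import median
from typing import List, Optional, Sequence

Row = List[Optional[float]]


def fit_medians(X_train: Sequence[Row]) -> List[float]:
    """Per-column median of the observed (non-missing) training values."""
    n_cols = len(X_train[0])
    return [median(row[c] for row in X_train if row[c] is not None) for c in range(n_cols)]


def impute(X: Sequence[Row], medians: Sequence[float]) -> List[List[float]]:
    """Replace None with the training median for that column."""
    return [[medians[c] if v is None else v for c, v in enumerate(row)] for row in X]


X_train = [[1.0, 10.0], [None, 20.0], [3.0, None], [5.0, 40.0]]
X_test = [[None, None], [2.0, 30.0]]

medians = fit_medians(X_train)   # column 0: median(1, 3, 5); column 1: median(10, 20, 40)
print(medians)                   # [3.0, 20.0]
print(impute(X_test, medians))   # [[3.0, 20.0], [2.0, 30.0]]
```

Fitting the medians once on the training split and reusing them everywhere else is the important design choice here. Recomputing them on test data would quietly leak information into the evaluation.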

Additionally, building random forests on large datasets can demand substantial computing resources, since many trees must be trained over extensive feature sets. Balancing model complexity against computational constraints remains an ongoing challenge in applying these powerful algorithms to real-world scenarios.

Conclusion

Decision trees and random forests are powerful tools in the field of predictive modeling. Understanding how decision trees work and the benefits of using random forests can significantly improve your data analysis and decision-making processes.

By breaking down complex decisions into a series of simple questions, decision trees provide a clear and interpretable framework for understanding data patterns. Random forests, on the other hand, leverage the strength of multiple decision trees to enhance prediction accuracy and reduce overfitting.

Despite their advantages, implementing decision trees and random forests may pose challenges such as model interpretability issues or computational complexity. However, with proper tuning and validation techniques, these obstacles can be overcome to unlock the full potential of these algorithms.

Mastering the concepts behind decision trees and random forests can elevate your predictive modeling skills to new heights. Whether you are analyzing customer behavior or predicting financial trends, incorporating these techniques into your workflow can lead to more accurate predictions and actionable insights. Experiment with different parameters, datasets, and use cases to harness the power of decision trees and random forests in your data science arsenal.